31 ändrade filer med 522 tillägg och 81 borttagningar

+ 60

- 44

README.md

Visa fil

| @@ -1,39 +1,50 @@ | |||

| # 简介 | |||

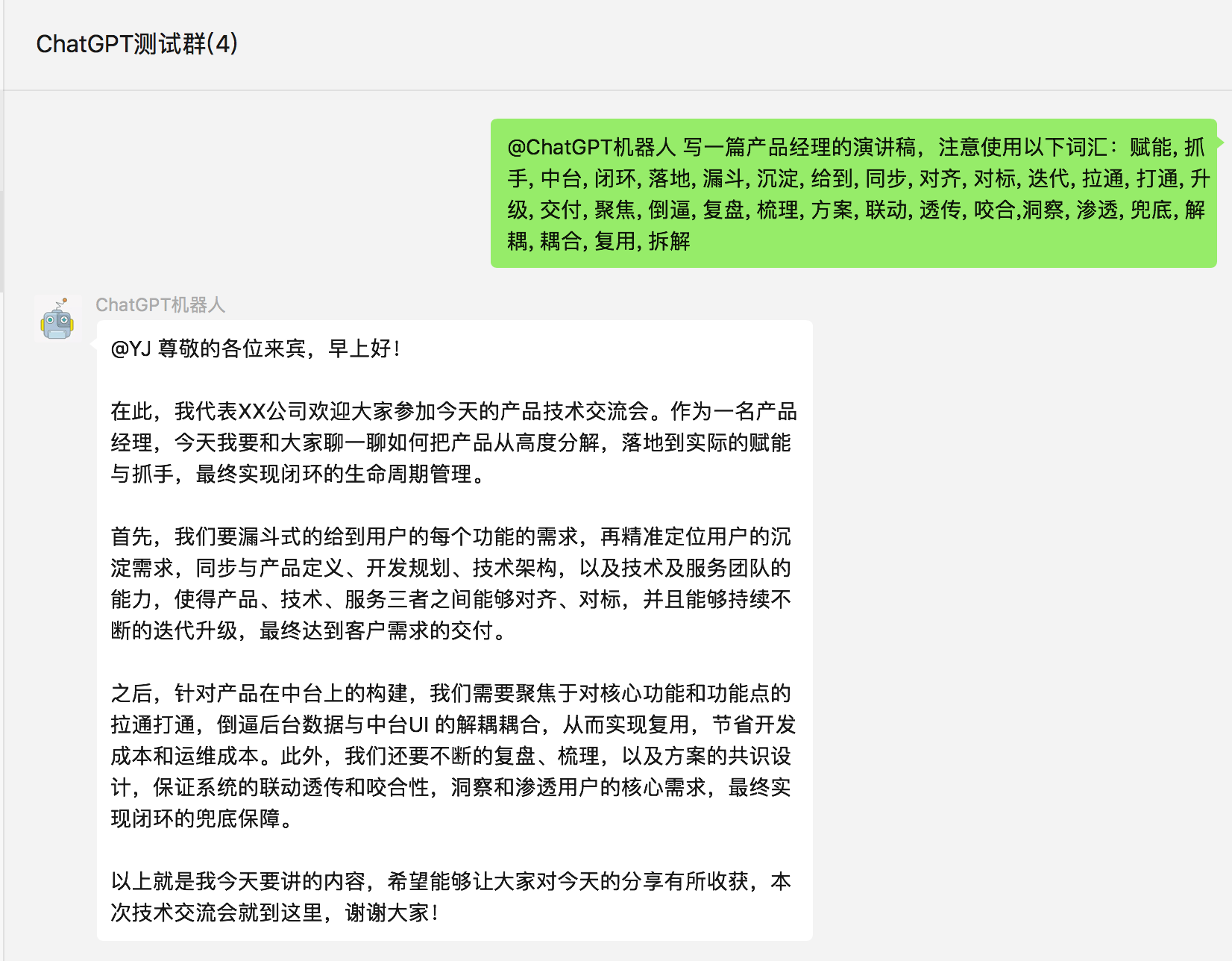

| > 本项目是基于大模型的智能对话机器人,支持企业微信、微信公众号、飞书、钉钉接入,可选择GPT3.5/GPT4.0/Claude/文心一言/讯飞星火/通义千问/Gemini/LinkAI/ZhipuAI,能处理文本、语音和图片,通过插件访问操作系统和互联网等外部资源,支持基于自有知识库定制企业AI应用。 | |||

| > chatgpt-on-wechat(简称CoW)项目是基于大模型的智能对话机器人,支持微信公众号、企业微信应用、飞书、钉钉接入,可选择GPT3.5/GPT4.0/Claude/Gemini/LinkAI/ChatGLM/KIMI/文心一言/讯飞星火/通义千问/LinkAI,能处理文本、语音和图片,通过插件访问操作系统和互联网等外部资源,支持基于自有知识库定制企业AI应用。 | |||

| 最新版本支持的功能如下: | |||

| - [x] **多端部署:** 有多种部署方式可选择且功能完备,目前已支持微信生态下公众号、企业微信应用、飞书、钉钉等部署方式 | |||

| - [x] **基础对话:** 私聊及群聊的消息智能回复,支持多轮会话上下文记忆,支持 GPT-3.5, GPT-4, Claude-3, Gemini, 文心一言, 讯飞星火, 通义千问,ChatGLM-4 | |||

| - [x] **语音能力:** 可识别语音消息,通过文字或语音回复,支持 azure, baidu, google, openai(whisper/tts) 等多种语音模型 | |||

| - [x] **图像能力:** 支持图片生成、图片识别、图生图(如照片修复),可选择 Dall-E-3, stable diffusion, replicate, midjourney, CogView-3, vision模型 | |||

| - [x] **丰富插件:** 支持个性化插件扩展,已实现多角色切换、文字冒险、敏感词过滤、聊天记录总结、文档总结和对话、联网搜索等插件 | |||

| - [x] **知识库:** 通过上传知识库文件自定义专属机器人,可作为数字分身、智能客服、私域助手使用,基于 [LinkAI](https://link-ai.tech) 实现 | |||

| - ✅ **多端部署:** 有多种部署方式可选择且功能完备,目前已支持微信公众号、企业微信应用、飞书、钉钉等部署方式 | |||

| - ✅ **基础对话:** 私聊及群聊的消息智能回复,支持多轮会话上下文记忆,支持 GPT-3.5, GPT-4, GPT-4o, Claude-3, Gemini, 文心一言, 讯飞星火, 通义千问,ChatGLM-4,Kimi(月之暗面) | |||

| - ✅ **语音能力:** 可识别语音消息,通过文字或语音回复,支持 azure, baidu, google, openai(whisper/tts) 等多种语音模型 | |||

| - ✅ **图像能力:** 支持图片生成、图片识别、图生图(如照片修复),可选择 Dall-E-3, stable diffusion, replicate, midjourney, CogView-3, vision模型 | |||

| - ✅ **丰富插件:** 支持个性化插件扩展,已实现多角色切换、文字冒险、敏感词过滤、聊天记录总结、文档总结和对话、联网搜索等插件 | |||

| - ✅ **知识库:** 通过上传知识库文件自定义专属机器人,可作为数字分身、智能客服、私域助手使用,基于 [LinkAI](https://link-ai.tech) 实现 | |||

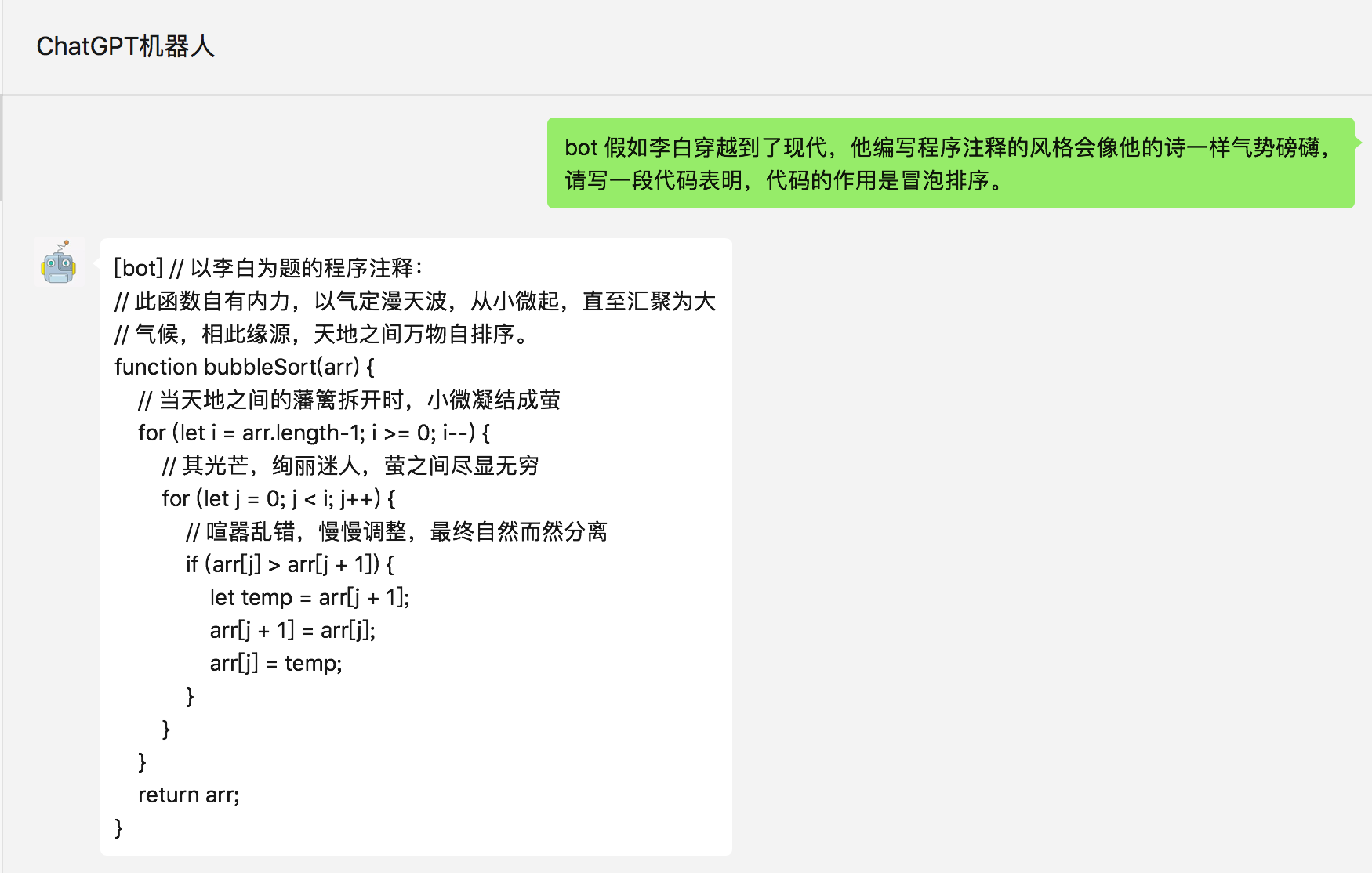

| # 演示 | |||

| ## 声明 | |||

| https://github.com/zhayujie/chatgpt-on-wechat/assets/26161723/d5154020-36e3-41db-8706-40ce9f3f1b1e | |||

| 1. 本项目遵循 [MIT开源协议](/LICENSE),仅用于技术研究和学习,使用本项目时需遵守所在地法律法规、相关政策以及企业章程,禁止用于任何违法或侵犯他人权益的行为 | |||

| 2. 境内使用该项目时,请使用国内厂商的大模型服务,并进行必要的内容安全审核及过滤 | |||

| 3. 本项目主要接入协同办公平台,请使用公众号、企微自建应用、钉钉、飞书等接入通道,其他通道为历史产物,已不再维护 | |||

| 4. 任何个人、团队和企业,无论以何种方式使用该项目、对何对象提供服务,所产生的一切后果,本项目均不承担任何责任 | |||

| Demo made by [Visionn](https://www.wangpc.cc/) | |||

| ## 社区 | |||

| # 商业支持 | |||

| 添加小助手微信加入开源项目交流群: | |||

| <img width="160" src="https://img-1317903499.cos.ap-guangzhou.myqcloud.com/docs/open-community.png"> | |||

| <br> | |||

| > 我们还提供企业级的 **AI应用平台**,包含知识库、Agent插件、应用管理等能力,支持多平台聚合的应用接入、客户端管理、对话管理,以及提供 | |||

| SaaS服务、私有化部署、稳定托管接入 等多种模式。 | |||

| # 企业服务 | |||

| <a href="https://link-ai.tech" target="_blank"><img width="800" src="https://cdn.link-ai.tech/image/link-ai-intro.jpg"></a> | |||

| > [LinkAI](https://link-ai.tech/) 是面向企业和开发者的一站式AI应用平台,聚合多模态大模型、知识库、Agent 插件、工作流等能力,支持一键接入主流平台并进行管理,支持SaaS、私有化部署多种模式。 | |||

| > | |||

| > 目前已在私域运营、智能客服、企业效率助手等场景积累了丰富的 AI 解决方案, 在电商、文教、健康、新消费等各行业沉淀了 AI 落地的最佳实践,致力于打造助力中小企业拥抱 AI 的一站式平台。 | |||

| 企业服务和商用咨询可联系产品顾问: | |||

| > LinkAI 目前 已在私域运营、智能客服、企业效率助手等场景积累了丰富的 AI 解决方案, 在电商、文教、健康、新消费、科技制造等各行业沉淀了大模型落地应用的最佳实践,致力于帮助更多企业和开发者拥抱 AI 生产力。 | |||

| <img width="240" src="https://img-1317903499.cos.ap-guangzhou.myqcloud.com/docs/product-manager-qrcode.jpg"> | |||

| **企业服务和产品咨询** 可联系产品顾问: | |||

| # 开源社区 | |||

| <img width="160" src="https://img-1317903499.cos.ap-guangzhou.myqcloud.com/docs/github-product-consult.png"> | |||

| 添加小助手微信加入开源项目交流群: | |||

| <br> | |||

| <img width="240" src="./docs/images/contact.jpg"> | |||

| # 🏷 更新日志 | |||

| # 更新日志 | |||

| >**2024.05.14:** [1.6.5版本](https://github.com/zhayujie/chatgpt-on-wechat/releases/tag/1.6.5),新增 gpt-4o 模型支持 | |||

| >**2024.04.26:** [1.6.0版本](https://github.com/zhayujie/chatgpt-on-wechat/releases/tag/1.6.0),新增 Kimi 接入、gpt-4-turbo版本升级、文件总结和语音识别问题修复 | |||

| >**2024.03.26:** [1.5.8版本](https://github.com/zhayujie/chatgpt-on-wechat/releases/tag/1.5.8) 和 [1.5.7版本](https://github.com/zhayujie/chatgpt-on-wechat/releases/tag/1.5.7),新增 GLM-4、Claude-3 模型,edge-tts 语音支持 | |||

| @@ -55,11 +66,13 @@ SaaS服务、私有化部署、稳定托管接入 等多种模式。 | |||

| 更早更新日志查看: [归档日志](/docs/version/old-version.md) | |||

| # 快速开始 | |||

| <br> | |||

| # 🚀 快速开始 | |||

| 快速开始文档:[项目搭建文档](https://docs.link-ai.tech/cow/quick-start) | |||

| 快速开始详细文档:[项目搭建文档](https://docs.link-ai.tech/cow/quick-start) | |||

| ## 准备 | |||

| ## 一、准备 | |||

| ### 1. 账号注册 | |||

| @@ -98,7 +111,7 @@ pip3 install -r requirements-optional.txt | |||

| ``` | |||

| > 如果某项依赖安装失败可注释掉对应的行再继续 | |||

| ## 配置 | |||

| ## 二、配置 | |||

| 配置文件的模板在根目录的`config-template.json`中,需复制该模板创建最终生效的 `config.json` 文件: | |||

| @@ -106,14 +119,13 @@ pip3 install -r requirements-optional.txt | |||

| cp config-template.json config.json | |||

| ``` | |||

| 然后在`config.json`中填入配置,以下是对默认配置的说明,可根据需要进行自定义修改(请去掉注释): | |||

| 然后在`config.json`中填入配置,以下是对默认配置的说明,可根据需要进行自定义修改(注意实际使用时请去掉注释,保证JSON格式的完整): | |||

| ```bash | |||

| # config.json文件内容示例 | |||

| { | |||

| "open_ai_api_key": "YOUR API KEY", # 填入上面创建的 OpenAI API KEY | |||

| "model": "gpt-3.5-turbo", # 模型名称, 支持 gpt-3.5-turbo, gpt-3.5-turbo-16k, gpt-4, wenxin, xunfei, claude-3-opus-20240229 | |||

| "claude_api_key":"YOUR API KEY" # 如果选用claude3模型的话,配置这个key,同时如想使用生图,语音等功能,仍需配置open_ai_api_key | |||

| "model": "gpt-3.5-turbo", # 模型名称, 支持 gpt-3.5-turbo, gpt-4, gpt-4-turbo, wenxin, xunfei, glm-4, claude-3-haiku, moonshot | |||

| "open_ai_api_key": "YOUR API KEY", # 如果使用openAI模型则填入上面创建的 OpenAI API KEY | |||

| "proxy": "", # 代理客户端的ip和端口,国内环境开启代理的需要填写该项,如 "127.0.0.1:7890" | |||

| "single_chat_prefix": ["bot", "@bot"], # 私聊时文本需要包含该前缀才能触发机器人回复 | |||

| "single_chat_reply_prefix": "[bot] ", # 私聊时自动回复的前缀,用于区分真人 | |||

| @@ -124,10 +136,8 @@ pip3 install -r requirements-optional.txt | |||

| "conversation_max_tokens": 1000, # 支持上下文记忆的最多字符数 | |||

| "speech_recognition": false, # 是否开启语音识别 | |||

| "group_speech_recognition": false, # 是否开启群组语音识别 | |||

| "use_azure_chatgpt": false, # 是否使用Azure ChatGPT service代替openai ChatGPT service. 当设置为true时需要设置 open_ai_api_base,如 https://xxx.openai.azure.com/ | |||

| "azure_deployment_id": "", # 采用Azure ChatGPT时,模型部署名称 | |||

| "azure_api_version": "", # 采用Azure ChatGPT时,API版本 | |||

| "character_desc": "你是ChatGPT, 一个由OpenAI训练的大型语言模型, 你旨在回答并解决人们的任何问题,并且可以使用多种语言与人交流。", # 人格描述 | |||

| "voice_reply_voice": false, # 是否使用语音回复语音 | |||

| "character_desc": "你是基于大语言模型的AI智能助手,旨在回答并解决人们的任何问题,并且可以使用多种语言与人交流。", # 人格描述 | |||

| # 订阅消息,公众号和企业微信channel中请填写,当被订阅时会自动回复,可使用特殊占位符。目前支持的占位符有{trigger_prefix},在程序中它会自动替换成bot的触发词。 | |||

| "subscribe_msg": "感谢您的关注!\n这里是ChatGPT,可以自由对话。\n支持语音对话。\n支持图片输出,画字开头的消息将按要求创作图片。\n支持角色扮演和文字冒险等丰富插件。\n输入{trigger_prefix}#help 查看详细指令。", | |||

| "use_linkai": false, # 是否使用LinkAI接口,默认关闭,开启后可国内访问,使用知识库和MJ | |||

| @@ -153,11 +163,11 @@ pip3 install -r requirements-optional.txt | |||

| + 添加 `"speech_recognition": true` 将开启语音识别,默认使用openai的whisper模型识别为文字,同时以文字回复,该参数仅支持私聊 (注意由于语音消息无法匹配前缀,一旦开启将对所有语音自动回复,支持语音触发画图); | |||

| + 添加 `"group_speech_recognition": true` 将开启群组语音识别,默认使用openai的whisper模型识别为文字,同时以文字回复,参数仅支持群聊 (会匹配group_chat_prefix和group_chat_keyword, 支持语音触发画图); | |||

| + 添加 `"voice_reply_voice": true` 将开启语音回复语音(同时作用于私聊和群聊),但是需要配置对应语音合成平台的key,由于itchat协议的限制,只能发送语音mp3文件,若使用wechaty则回复的是微信语音。 | |||

| + 添加 `"voice_reply_voice": true` 将开启语音回复语音(同时作用于私聊和群聊) | |||

| **4.其他配置** | |||

| + `model`: 模型名称,目前支持 `gpt-3.5-turbo`, `text-davinci-003`, `gpt-4`, `gpt-4-32k`, `wenxin` , `claude` , `xunfei`(其中gpt-4 api暂未完全开放,申请通过后可使用) | |||

| + `model`: 模型名称,目前支持 `gpt-3.5-turbo`, `gpt-4o`, `gpt-4-turbo`, `gpt-4`, `wenxin` , `claude` , `gemini`, `glm-4`, `xunfei`, `moonshot` | |||

| + `temperature`,`frequency_penalty`,`presence_penalty`: Chat API接口参数,详情参考[OpenAI官方文档。](https://platform.openai.com/docs/api-reference/chat) | |||

| + `proxy`:由于目前 `openai` 接口国内无法访问,需配置代理客户端的地址,详情参考 [#351](https://github.com/zhayujie/chatgpt-on-wechat/issues/351) | |||

| + 对于图像生成,在满足个人或群组触发条件外,还需要额外的关键词前缀来触发,对应配置 `image_create_prefix ` | |||

| @@ -165,7 +175,7 @@ pip3 install -r requirements-optional.txt | |||

| + `conversation_max_tokens`:表示能够记忆的上下文最大字数(一问一答为一组对话,如果累积的对话字数超出限制,就会优先移除最早的一组对话) | |||

| + `rate_limit_chatgpt`,`rate_limit_dalle`:每分钟最高问答速率、画图速率,超速后排队按序处理。 | |||

| + `clear_memory_commands`: 对话内指令,主动清空前文记忆,字符串数组可自定义指令别名。 | |||

| + `hot_reload`: 程序退出后,暂存微信扫码状态,默认关闭。 | |||

| + `hot_reload`: 程序退出后,暂存等于状态,默认关闭。 | |||

| + `character_desc` 配置中保存着你对机器人说的一段话,他会记住这段话并作为他的设定,你可以为他定制任何人格 (关于会话上下文的更多内容参考该 [issue](https://github.com/zhayujie/chatgpt-on-wechat/issues/43)) | |||

| + `subscribe_msg`:订阅消息,公众号和企业微信channel中请填写,当被订阅时会自动回复, 可使用特殊占位符。目前支持的占位符有{trigger_prefix},在程序中它会自动替换成bot的触发词。 | |||

| @@ -177,7 +187,7 @@ pip3 install -r requirements-optional.txt | |||

| **本说明文档可能会未及时更新,当前所有可选的配置项均在该[`config.py`](https://github.com/zhayujie/chatgpt-on-wechat/blob/master/config.py)中列出。** | |||

| ## 运行 | |||

| ## 三、运行 | |||

| ### 1.本地运行 | |||

| @@ -187,7 +197,7 @@ pip3 install -r requirements-optional.txt | |||

| python3 app.py # windows环境下该命令通常为 python app.py | |||

| ``` | |||

| 终端输出二维码后,使用微信进行扫码,当输出 "Start auto replying" 时表示自动回复程序已经成功运行了(注意:用于登录的微信需要在支付处已完成实名认证)。扫码登录后你的账号就成为机器人了,可以在手机端通过配置的关键词触发自动回复 (任意好友发送消息给你,或是自己发消息给好友),参考[#142](https://github.com/zhayujie/chatgpt-on-wechat/issues/142)。 | |||

| 终端输出二维码后,进行扫码登录,当输出 "Start auto replying" 时表示自动回复程序已经成功运行了(注意:用于登录的账号需要在支付处已完成实名认证)。扫码登录后你的账号就成为机器人了,可以在手机端通过配置的关键词触发自动回复 (任意好友发送消息给你,或是自己发消息给好友),参考[#142](https://github.com/zhayujie/chatgpt-on-wechat/issues/142)。 | |||

| ### 2.服务器部署 | |||

| @@ -209,7 +219,7 @@ nohup python3 app.py & tail -f nohup.out # 在后台运行程序并通 | |||

| > 前提是需要安装好 `docker` 及 `docker-compose`,安装成功的表现是执行 `docker -v` 和 `docker-compose version` (或 docker compose version) 可以查看到版本号,可前往 [docker官网](https://docs.docker.com/engine/install/) 进行下载。 | |||

| #### (1) 下载 docker-compose.yml 文件 | |||

| **(1) 下载 docker-compose.yml 文件** | |||

| ```bash | |||

| wget https://open-1317903499.cos.ap-guangzhou.myqcloud.com/docker-compose.yml | |||

| @@ -217,7 +227,7 @@ wget https://open-1317903499.cos.ap-guangzhou.myqcloud.com/docker-compose.yml | |||

| 下载完成后打开 `docker-compose.yml` 修改所需配置,如 `OPEN_AI_API_KEY` 和 `GROUP_NAME_WHITE_LIST` 等。 | |||

| #### (2) 启动容器 | |||

| **(2) 启动容器** | |||

| 在 `docker-compose.yml` 所在目录下执行以下命令启动容器: | |||

| @@ -238,7 +248,7 @@ sudo docker compose up -d | |||

| sudo docker logs -f chatgpt-on-wechat | |||

| ``` | |||

| #### (3) 插件使用 | |||

| **(3) 插件使用** | |||

| 如果需要在docker容器中修改插件配置,可通过挂载的方式完成,将 [插件配置文件](https://github.com/zhayujie/chatgpt-on-wechat/blob/master/plugins/config.json.template) | |||

| 重命名为 `config.json`,放置于 `docker-compose.yml` 相同目录下,并在 `docker-compose.yml` 中的 `chatgpt-on-wechat` 部分下添加 `volumes` 映射: | |||

| @@ -260,16 +270,22 @@ volumes: | |||

| [](https://railway.app/template/qApznZ?referralCode=RC3znh) | |||

| ## 常见问题 | |||

| <br> | |||

| # 🔎 常见问题 | |||

| FAQs: <https://github.com/zhayujie/chatgpt-on-wechat/wiki/FAQs> | |||

| 或直接在线咨询 [项目小助手](https://link-ai.tech/app/Kv2fXJcH) (beta版本,语料完善中,回复仅供参考) | |||

| 或直接在线咨询 [项目小助手](https://link-ai.tech/app/Kv2fXJcH) (语料持续完善中,回复仅供参考) | |||

| ## 开发 | |||

| # 🛠️ 开发 | |||

| 欢迎接入更多应用,参考 [Terminal代码](https://github.com/zhayujie/chatgpt-on-wechat/blob/master/channel/terminal/terminal_channel.py) 实现接收和发送消息逻辑即可接入。 同时欢迎增加新的插件,参考 [插件说明文档](https://github.com/zhayujie/chatgpt-on-wechat/tree/master/plugins)。 | |||

| ## 联系 | |||

| # ✉ 联系 | |||

| 欢迎提交PR、Issues,以及Star支持一下。程序运行遇到问题可以查看 [常见问题列表](https://github.com/zhayujie/chatgpt-on-wechat/wiki/FAQs) ,其次前往 [Issues](https://github.com/zhayujie/chatgpt-on-wechat/issues) 中搜索。个人开发者可加入开源交流群参与更多讨论,企业用户可联系[产品顾问](https://img-1317903499.cos.ap-guangzhou.myqcloud.com/docs/product-manager-qrcode.jpg)咨询。 | |||

| # 🌟 贡献者 | |||

|  | |||

+ 7

- 1

bot/bot_factory.py

Visa fil

| @@ -50,7 +50,9 @@ def create_bot(bot_type): | |||

| elif bot_type == const.QWEN: | |||

| from bot.ali.ali_qwen_bot import AliQwenBot | |||

| return AliQwenBot() | |||

| elif bot_type == const.QWEN_DASHSCOPE: | |||

| from bot.dashscope.dashscope_bot import DashscopeBot | |||

| return DashscopeBot() | |||

| elif bot_type == const.GEMINI: | |||

| from bot.gemini.google_gemini_bot import GoogleGeminiBot | |||

| return GoogleGeminiBot() | |||

| @@ -59,5 +61,9 @@ def create_bot(bot_type): | |||

| from bot.zhipuai.zhipuai_bot import ZHIPUAIBot | |||

| return ZHIPUAIBot() | |||

| elif bot_type == const.MOONSHOT: | |||

| from bot.moonshot.moonshot_bot import MoonshotBot | |||

| return MoonshotBot() | |||

| raise RuntimeError | |||

+ 3

- 2

bot/chatgpt/chat_gpt_session.py

Visa fil

| @@ -62,11 +62,12 @@ def num_tokens_from_messages(messages, model): | |||

| import tiktoken | |||

| if model in ["gpt-3.5-turbo-0301", "gpt-35-turbo", "gpt-3.5-turbo-1106", "moonshot"]: | |||

| if model in ["gpt-3.5-turbo-0301", "gpt-35-turbo", "gpt-3.5-turbo-1106", "moonshot", const.LINKAI_35]: | |||

| return num_tokens_from_messages(messages, model="gpt-3.5-turbo") | |||

| elif model in ["gpt-4-0314", "gpt-4-0613", "gpt-4-32k", "gpt-4-32k-0613", "gpt-3.5-turbo-0613", | |||

| "gpt-3.5-turbo-16k", "gpt-3.5-turbo-16k-0613", "gpt-35-turbo-16k", "gpt-4-turbo-preview", | |||

| "gpt-4-1106-preview", const.GPT4_TURBO_PREVIEW, const.GPT4_VISION_PREVIEW]: | |||

| "gpt-4-1106-preview", const.GPT4_TURBO_PREVIEW, const.GPT4_VISION_PREVIEW, const.GPT4_TURBO_01_25, | |||

| const.GPT_4o, const.LINKAI_4o, const.LINKAI_4_TURBO]: | |||

| return num_tokens_from_messages(messages, model="gpt-4") | |||

| elif model.startswith("claude-3"): | |||

| return num_tokens_from_messages(messages, model="gpt-3.5-turbo") | |||

+ 117

- 0

bot/dashscope/dashscope_bot.py

Visa fil

| @@ -0,0 +1,117 @@ | |||

| # encoding:utf-8 | |||

| from bot.bot import Bot | |||

| from bot.session_manager import SessionManager | |||

| from bridge.context import ContextType | |||

| from bridge.reply import Reply, ReplyType | |||

| from common.log import logger | |||

| from config import conf, load_config | |||

| from .dashscope_session import DashscopeSession | |||

| import os | |||

| import dashscope | |||

| from http import HTTPStatus | |||

| dashscope_models = { | |||

| "qwen-turbo": dashscope.Generation.Models.qwen_turbo, | |||

| "qwen-plus": dashscope.Generation.Models.qwen_plus, | |||

| "qwen-max": dashscope.Generation.Models.qwen_max, | |||

| "qwen-bailian-v1": dashscope.Generation.Models.bailian_v1 | |||

| } | |||

| # ZhipuAI对话模型API | |||

| class DashscopeBot(Bot): | |||

| def __init__(self): | |||

| super().__init__() | |||

| self.sessions = SessionManager(DashscopeSession, model=conf().get("model") or "qwen-plus") | |||

| self.model_name = conf().get("model") or "qwen-plus" | |||

| self.api_key = conf().get("dashscope_api_key") | |||

| os.environ["DASHSCOPE_API_KEY"] = self.api_key | |||

| self.client = dashscope.Generation | |||

| def reply(self, query, context=None): | |||

| # acquire reply content | |||

| if context.type == ContextType.TEXT: | |||

| logger.info("[DASHSCOPE] query={}".format(query)) | |||

| session_id = context["session_id"] | |||

| reply = None | |||

| clear_memory_commands = conf().get("clear_memory_commands", ["#清除记忆"]) | |||

| if query in clear_memory_commands: | |||

| self.sessions.clear_session(session_id) | |||

| reply = Reply(ReplyType.INFO, "记忆已清除") | |||

| elif query == "#清除所有": | |||

| self.sessions.clear_all_session() | |||

| reply = Reply(ReplyType.INFO, "所有人记忆已清除") | |||

| elif query == "#更新配置": | |||

| load_config() | |||

| reply = Reply(ReplyType.INFO, "配置已更新") | |||

| if reply: | |||

| return reply | |||

| session = self.sessions.session_query(query, session_id) | |||

| logger.debug("[DASHSCOPE] session query={}".format(session.messages)) | |||

| reply_content = self.reply_text(session) | |||

| logger.debug( | |||

| "[DASHSCOPE] new_query={}, session_id={}, reply_cont={}, completion_tokens={}".format( | |||

| session.messages, | |||

| session_id, | |||

| reply_content["content"], | |||

| reply_content["completion_tokens"], | |||

| ) | |||

| ) | |||

| if reply_content["completion_tokens"] == 0 and len(reply_content["content"]) > 0: | |||

| reply = Reply(ReplyType.ERROR, reply_content["content"]) | |||

| elif reply_content["completion_tokens"] > 0: | |||

| self.sessions.session_reply(reply_content["content"], session_id, reply_content["total_tokens"]) | |||

| reply = Reply(ReplyType.TEXT, reply_content["content"]) | |||

| else: | |||

| reply = Reply(ReplyType.ERROR, reply_content["content"]) | |||

| logger.debug("[DASHSCOPE] reply {} used 0 tokens.".format(reply_content)) | |||

| return reply | |||

| else: | |||

| reply = Reply(ReplyType.ERROR, "Bot不支持处理{}类型的消息".format(context.type)) | |||

| return reply | |||

| def reply_text(self, session: DashscopeSession, retry_count=0) -> dict: | |||

| """ | |||

| call openai's ChatCompletion to get the answer | |||

| :param session: a conversation session | |||

| :param session_id: session id | |||

| :param retry_count: retry count | |||

| :return: {} | |||

| """ | |||

| try: | |||

| dashscope.api_key = self.api_key | |||

| response = self.client.call( | |||

| dashscope_models[self.model_name], | |||

| messages=session.messages, | |||

| result_format="message" | |||

| ) | |||

| if response.status_code == HTTPStatus.OK: | |||

| content = response.output.choices[0]["message"]["content"] | |||

| return { | |||

| "total_tokens": response.usage["total_tokens"], | |||

| "completion_tokens": response.usage["output_tokens"], | |||

| "content": content, | |||

| } | |||

| else: | |||

| logger.error('Request id: %s, Status code: %s, error code: %s, error message: %s' % ( | |||

| response.request_id, response.status_code, | |||

| response.code, response.message | |||

| )) | |||

| result = {"completion_tokens": 0, "content": "我现在有点累了,等会再来吧"} | |||

| need_retry = retry_count < 2 | |||

| result = {"completion_tokens": 0, "content": "我现在有点累了,等会再来吧"} | |||

| if need_retry: | |||

| return self.reply_text(session, retry_count + 1) | |||

| else: | |||

| return result | |||

| except Exception as e: | |||

| logger.exception(e) | |||

| need_retry = retry_count < 2 | |||

| result = {"completion_tokens": 0, "content": "我现在有点累了,等会再来吧"} | |||

| if need_retry: | |||

| return self.reply_text(session, retry_count + 1) | |||

| else: | |||

| return result | |||

+ 51

- 0

bot/dashscope/dashscope_session.py

Visa fil

| @@ -0,0 +1,51 @@ | |||

| from bot.session_manager import Session | |||

| from common.log import logger | |||

| class DashscopeSession(Session): | |||

| def __init__(self, session_id, system_prompt=None, model="qwen-turbo"): | |||

| super().__init__(session_id) | |||

| self.reset() | |||

| def discard_exceeding(self, max_tokens, cur_tokens=None): | |||

| precise = True | |||

| try: | |||

| cur_tokens = self.calc_tokens() | |||

| except Exception as e: | |||

| precise = False | |||

| if cur_tokens is None: | |||

| raise e | |||

| logger.debug("Exception when counting tokens precisely for query: {}".format(e)) | |||

| while cur_tokens > max_tokens: | |||

| if len(self.messages) > 2: | |||

| self.messages.pop(1) | |||

| elif len(self.messages) == 2 and self.messages[1]["role"] == "assistant": | |||

| self.messages.pop(1) | |||

| if precise: | |||

| cur_tokens = self.calc_tokens() | |||

| else: | |||

| cur_tokens = cur_tokens - max_tokens | |||

| break | |||

| elif len(self.messages) == 2 and self.messages[1]["role"] == "user": | |||

| logger.warn("user message exceed max_tokens. total_tokens={}".format(cur_tokens)) | |||

| break | |||

| else: | |||

| logger.debug("max_tokens={}, total_tokens={}, len(messages)={}".format(max_tokens, cur_tokens, | |||

| len(self.messages))) | |||

| break | |||

| if precise: | |||

| cur_tokens = self.calc_tokens() | |||

| else: | |||

| cur_tokens = cur_tokens - max_tokens | |||

| return cur_tokens | |||

| def calc_tokens(self): | |||

| return num_tokens_from_messages(self.messages) | |||

| def num_tokens_from_messages(messages): | |||

| # 只是大概,具体计算规则:https://help.aliyun.com/zh/dashscope/developer-reference/token-api?spm=a2c4g.11186623.0.0.4d8b12b0BkP3K9 | |||

| tokens = 0 | |||

| for msg in messages: | |||

| tokens += len(msg["content"]) | |||

| return tokens | |||

+ 4

- 4

bot/linkai/link_ai_bot.py

Visa fil

| @@ -122,7 +122,7 @@ class LinkAIBot(Bot): | |||

| headers = {"Authorization": "Bearer " + linkai_api_key} | |||

| # do http request | |||

| base_url = conf().get("linkai_api_base", "https://api.link-ai.chat") | |||

| base_url = conf().get("linkai_api_base", "https://api.link-ai.tech") | |||

| res = requests.post(url=base_url + "/v1/chat/completions", json=body, headers=headers, | |||

| timeout=conf().get("request_timeout", 180)) | |||

| if res.status_code == 200: | |||

| @@ -261,7 +261,7 @@ class LinkAIBot(Bot): | |||

| headers = {"Authorization": "Bearer " + conf().get("linkai_api_key")} | |||

| # do http request | |||

| base_url = conf().get("linkai_api_base", "https://api.link-ai.chat") | |||

| base_url = conf().get("linkai_api_base", "https://api.link-ai.tech") | |||

| res = requests.post(url=base_url + "/v1/chat/completions", json=body, headers=headers, | |||

| timeout=conf().get("request_timeout", 180)) | |||

| if res.status_code == 200: | |||

| @@ -304,7 +304,7 @@ class LinkAIBot(Bot): | |||

| def _fetch_app_info(self, app_code: str): | |||

| headers = {"Authorization": "Bearer " + conf().get("linkai_api_key")} | |||

| # do http request | |||

| base_url = conf().get("linkai_api_base", "https://api.link-ai.chat") | |||

| base_url = conf().get("linkai_api_base", "https://api.link-ai.tech") | |||

| params = {"app_code": app_code} | |||

| res = requests.get(url=base_url + "/v1/app/info", params=params, headers=headers, timeout=(5, 10)) | |||

| if res.status_code == 200: | |||

| @@ -326,7 +326,7 @@ class LinkAIBot(Bot): | |||

| "response_format": "url", | |||

| "img_proxy": conf().get("image_proxy") | |||

| } | |||

| url = conf().get("linkai_api_base", "https://api.link-ai.chat") + "/v1/images/generations" | |||

| url = conf().get("linkai_api_base", "https://api.link-ai.tech") + "/v1/images/generations" | |||

| res = requests.post(url, headers=headers, json=data, timeout=(5, 90)) | |||

| t2 = time.time() | |||

| image_url = res.json()["data"][0]["url"] | |||

+ 143

- 0

bot/moonshot/moonshot_bot.py

Visa fil

| @@ -0,0 +1,143 @@ | |||

| # encoding:utf-8 | |||

| import time | |||

| import openai | |||

| import openai.error | |||

| from bot.bot import Bot | |||

| from bot.session_manager import SessionManager | |||

| from bridge.context import ContextType | |||

| from bridge.reply import Reply, ReplyType | |||

| from common.log import logger | |||

| from config import conf, load_config | |||

| from .moonshot_session import MoonshotSession | |||

| import requests | |||

| # ZhipuAI对话模型API | |||

| class MoonshotBot(Bot): | |||

| def __init__(self): | |||

| super().__init__() | |||

| self.sessions = SessionManager(MoonshotSession, model=conf().get("model") or "moonshot-v1-128k") | |||

| self.args = { | |||

| "model": conf().get("model") or "moonshot-v1-128k", # 对话模型的名称 | |||

| "temperature": conf().get("temperature", 0.3), # 如果设置,值域须为 [0, 1] 我们推荐 0.3,以达到较合适的效果。 | |||

| "top_p": conf().get("top_p", 1.0), # 使用默认值 | |||

| } | |||

| self.api_key = conf().get("moonshot_api_key") | |||

| self.base_url = conf().get("moonshot_base_url", "https://api.moonshot.cn/v1/chat/completions") | |||

| def reply(self, query, context=None): | |||

| # acquire reply content | |||

| if context.type == ContextType.TEXT: | |||

| logger.info("[MOONSHOT_AI] query={}".format(query)) | |||

| session_id = context["session_id"] | |||

| reply = None | |||

| clear_memory_commands = conf().get("clear_memory_commands", ["#清除记忆"]) | |||

| if query in clear_memory_commands: | |||

| self.sessions.clear_session(session_id) | |||

| reply = Reply(ReplyType.INFO, "记忆已清除") | |||

| elif query == "#清除所有": | |||

| self.sessions.clear_all_session() | |||

| reply = Reply(ReplyType.INFO, "所有人记忆已清除") | |||

| elif query == "#更新配置": | |||

| load_config() | |||

| reply = Reply(ReplyType.INFO, "配置已更新") | |||

| if reply: | |||

| return reply | |||

| session = self.sessions.session_query(query, session_id) | |||

| logger.debug("[MOONSHOT_AI] session query={}".format(session.messages)) | |||

| model = context.get("moonshot_model") | |||

| new_args = self.args.copy() | |||

| if model: | |||

| new_args["model"] = model | |||

| # if context.get('stream'): | |||

| # # reply in stream | |||

| # return self.reply_text_stream(query, new_query, session_id) | |||

| reply_content = self.reply_text(session, args=new_args) | |||

| logger.debug( | |||

| "[MOONSHOT_AI] new_query={}, session_id={}, reply_cont={}, completion_tokens={}".format( | |||

| session.messages, | |||

| session_id, | |||

| reply_content["content"], | |||

| reply_content["completion_tokens"], | |||

| ) | |||

| ) | |||

| if reply_content["completion_tokens"] == 0 and len(reply_content["content"]) > 0: | |||

| reply = Reply(ReplyType.ERROR, reply_content["content"]) | |||

| elif reply_content["completion_tokens"] > 0: | |||

| self.sessions.session_reply(reply_content["content"], session_id, reply_content["total_tokens"]) | |||

| reply = Reply(ReplyType.TEXT, reply_content["content"]) | |||

| else: | |||

| reply = Reply(ReplyType.ERROR, reply_content["content"]) | |||

| logger.debug("[MOONSHOT_AI] reply {} used 0 tokens.".format(reply_content)) | |||

| return reply | |||

| else: | |||

| reply = Reply(ReplyType.ERROR, "Bot不支持处理{}类型的消息".format(context.type)) | |||

| return reply | |||

| def reply_text(self, session: MoonshotSession, args=None, retry_count=0) -> dict: | |||

| """ | |||

| call openai's ChatCompletion to get the answer | |||

| :param session: a conversation session | |||

| :param session_id: session id | |||

| :param retry_count: retry count | |||

| :return: {} | |||

| """ | |||

| try: | |||

| headers = { | |||

| "Content-Type": "application/json", | |||

| "Authorization": "Bearer " + self.api_key | |||

| } | |||

| body = args | |||

| body["messages"] = session.messages | |||

| # logger.debug("[MOONSHOT_AI] response={}".format(response)) | |||

| # logger.info("[MOONSHOT_AI] reply={}, total_tokens={}".format(response.choices[0]['message']['content'], response["usage"]["total_tokens"])) | |||

| res = requests.post( | |||

| self.base_url, | |||

| headers=headers, | |||

| json=body | |||

| ) | |||

| if res.status_code == 200: | |||

| response = res.json() | |||

| return { | |||

| "total_tokens": response["usage"]["total_tokens"], | |||

| "completion_tokens": response["usage"]["completion_tokens"], | |||

| "content": response["choices"][0]["message"]["content"] | |||

| } | |||

| else: | |||

| response = res.json() | |||

| error = response.get("error") | |||

| logger.error(f"[MOONSHOT_AI] chat failed, status_code={res.status_code}, " | |||

| f"msg={error.get('message')}, type={error.get('type')}") | |||

| result = {"completion_tokens": 0, "content": "提问太快啦,请休息一下再问我吧"} | |||

| need_retry = False | |||

| if res.status_code >= 500: | |||

| # server error, need retry | |||

| logger.warn(f"[MOONSHOT_AI] do retry, times={retry_count}") | |||

| need_retry = retry_count < 2 | |||

| elif res.status_code == 401: | |||

| result["content"] = "授权失败,请检查API Key是否正确" | |||

| elif res.status_code == 429: | |||

| result["content"] = "请求过于频繁,请稍后再试" | |||

| need_retry = retry_count < 2 | |||

| else: | |||

| need_retry = False | |||

| if need_retry: | |||

| time.sleep(3) | |||

| return self.reply_text(session, args, retry_count + 1) | |||

| else: | |||

| return result | |||

| except Exception as e: | |||

| logger.exception(e) | |||

| need_retry = retry_count < 2 | |||

| result = {"completion_tokens": 0, "content": "我现在有点累了,等会再来吧"} | |||

| if need_retry: | |||

| return self.reply_text(session, args, retry_count + 1) | |||

| else: | |||

| return result | |||

+ 51

- 0

bot/moonshot/moonshot_session.py

Visa fil

| @@ -0,0 +1,51 @@ | |||

| from bot.session_manager import Session | |||

| from common.log import logger | |||

| class MoonshotSession(Session): | |||

| def __init__(self, session_id, system_prompt=None, model="moonshot-v1-128k"): | |||

| super().__init__(session_id, system_prompt) | |||

| self.model = model | |||

| self.reset() | |||

| def discard_exceeding(self, max_tokens, cur_tokens=None): | |||

| precise = True | |||

| try: | |||

| cur_tokens = self.calc_tokens() | |||

| except Exception as e: | |||

| precise = False | |||

| if cur_tokens is None: | |||

| raise e | |||

| logger.debug("Exception when counting tokens precisely for query: {}".format(e)) | |||

| while cur_tokens > max_tokens: | |||

| if len(self.messages) > 2: | |||

| self.messages.pop(1) | |||

| elif len(self.messages) == 2 and self.messages[1]["role"] == "assistant": | |||

| self.messages.pop(1) | |||

| if precise: | |||

| cur_tokens = self.calc_tokens() | |||

| else: | |||

| cur_tokens = cur_tokens - max_tokens | |||

| break | |||

| elif len(self.messages) == 2 and self.messages[1]["role"] == "user": | |||

| logger.warn("user message exceed max_tokens. total_tokens={}".format(cur_tokens)) | |||

| break | |||

| else: | |||

| logger.debug("max_tokens={}, total_tokens={}, len(messages)={}".format(max_tokens, cur_tokens, | |||

| len(self.messages))) | |||

| break | |||

| if precise: | |||

| cur_tokens = self.calc_tokens() | |||

| else: | |||

| cur_tokens = cur_tokens - max_tokens | |||

| return cur_tokens | |||

| def calc_tokens(self): | |||

| return num_tokens_from_messages(self.messages, self.model) | |||

| def num_tokens_from_messages(messages, model): | |||

| tokens = 0 | |||

| for msg in messages: | |||

| tokens += len(msg["content"]) | |||

| return tokens | |||

+ 2

- 0

bot/zhipuai/zhipu_ai_session.py

Visa fil

| @@ -7,6 +7,8 @@ class ZhipuAISession(Session): | |||

| super().__init__(session_id, system_prompt) | |||

| self.model = model | |||

| self.reset() | |||

| if not system_prompt: | |||

| logger.warn("[ZhiPu] `character_desc` can not be empty") | |||

| def discard_exceeding(self, max_tokens, cur_tokens=None): | |||

| precise = True | |||

+ 9

- 2

bridge/bridge.py

Visa fil

| @@ -30,6 +30,8 @@ class Bridge(object): | |||

| self.btype["chat"] = const.XUNFEI | |||

| if model_type in [const.QWEN]: | |||

| self.btype["chat"] = const.QWEN | |||

| if model_type in [const.QWEN_TURBO, const.QWEN_PLUS, const.QWEN_MAX]: | |||

| self.btype["chat"] = const.QWEN_DASHSCOPE | |||

| if model_type in [const.GEMINI]: | |||

| self.btype["chat"] = const.GEMINI | |||

| if model_type in [const.ZHIPU_AI]: | |||

| @@ -37,17 +39,22 @@ class Bridge(object): | |||

| if model_type and model_type.startswith("claude-3"): | |||

| self.btype["chat"] = const.CLAUDEAPI | |||

| if model_type in ["claude"]: | |||

| self.btype["chat"] = const.CLAUDEAI | |||

| if model_type in ["moonshot-v1-8k", "moonshot-v1-32k", "moonshot-v1-128k"]: | |||

| self.btype["chat"] = const.MOONSHOT | |||

| if conf().get("use_linkai") and conf().get("linkai_api_key"): | |||

| self.btype["chat"] = const.LINKAI | |||

| if not conf().get("voice_to_text") or conf().get("voice_to_text") in ["openai"]: | |||

| self.btype["voice_to_text"] = const.LINKAI | |||

| if not conf().get("text_to_voice") or conf().get("text_to_voice") in ["openai", const.TTS_1, const.TTS_1_HD]: | |||

| self.btype["text_to_voice"] = const.LINKAI | |||

| if model_type in ["claude"]: | |||

| self.btype["chat"] = const.CLAUDEAI | |||

| self.bots = {} | |||

| self.chat_bots = {} | |||

| # 模型对应的接口 | |||

| def get_bot(self, typename): | |||

| if self.bots.get(typename) is None: | |||

+ 0

- 1

channel/chat_channel.py

Visa fil

| @@ -4,7 +4,6 @@ import threading | |||

| import time | |||

| from asyncio import CancelledError | |||

| from concurrent.futures import Future, ThreadPoolExecutor | |||

| from concurrent import futures | |||

| from bridge.context import * | |||

| from bridge.reply import * | |||

+ 1

- 1

channel/wechat/wechat_channel.py

Visa fil

| @@ -132,7 +132,7 @@ class WechatChannel(ChatChannel): | |||

| # start message listener | |||

| itchat.run() | |||

| except Exception as e: | |||

| logger.error(e) | |||

| logger.exception(e) | |||

| def exitCallback(self): | |||

| try: | |||

+ 16

- 3

common/const.py

Visa fil

| @@ -8,6 +8,12 @@ LINKAI = "linkai" | |||

| CLAUDEAI = "claude" | |||

| CLAUDEAPI= "claudeAPI" | |||

| QWEN = "qwen" | |||

| QWEN_DASHSCOPE = "dashscope" | |||

| QWEN_TURBO = "qwen-turbo" | |||

| QWEN_PLUS = "qwen-plus" | |||

| QWEN_MAX = "qwen-max" | |||

| GEMINI = "gemini" | |||

| ZHIPU_AI = "glm-4" | |||

| MOONSHOT = "moonshot" | |||

| @@ -17,14 +23,21 @@ MOONSHOT = "moonshot" | |||

| CLAUDE3 = "claude-3-opus-20240229" | |||

| GPT35 = "gpt-3.5-turbo" | |||

| GPT4 = "gpt-4" | |||

| GPT4_TURBO_PREVIEW = "gpt-4-0125-preview" | |||

| GPT_4o = "gpt-4o" | |||

| LINKAI_35 = "linkai-3.5" | |||

| LINKAI_4_TURBO = "linkai-4-turbo" | |||

| LINKAI_4o = "linkai-4o" | |||

| GPT4_TURBO_PREVIEW = "gpt-4-turbo-2024-04-09" | |||

| GPT4_TURBO_04_09 = "gpt-4-turbo-2024-04-09" | |||

| GPT4_TURBO_01_25 = "gpt-4-0125-preview" | |||

| GPT4_VISION_PREVIEW = "gpt-4-vision-preview" | |||

| WHISPER_1 = "whisper-1" | |||

| TTS_1 = "tts-1" | |||

| TTS_1_HD = "tts-1-hd" | |||

| MODEL_LIST = ["gpt-3.5-turbo", "gpt-3.5-turbo-16k", "gpt-4", "wenxin", "wenxin-4", "xunfei", "claude","claude-3-opus-20240229", "gpt-4-turbo", | |||

| "gpt-4-turbo-preview", "gpt-4-1106-preview", GPT4_TURBO_PREVIEW, QWEN, GEMINI, ZHIPU_AI, MOONSHOT] | |||

| MODEL_LIST = ["gpt-3.5-turbo", "gpt-3.5-turbo-16k", "gpt-4", "wenxin", "wenxin-4", "xunfei", "claude", "claude-3-opus-20240229", "gpt-4-turbo", | |||

| "gpt-4-turbo-preview", "gpt-4-1106-preview", GPT4_TURBO_PREVIEW, GPT4_TURBO_01_25, GPT_4o, QWEN, GEMINI, ZHIPU_AI, MOONSHOT, | |||

| QWEN_TURBO, QWEN_PLUS, QWEN_MAX, LINKAI_35, LINKAI_4_TURBO, LINKAI_4o] | |||

| # channel | |||

| FEISHU = "feishu" | |||

+ 14

- 3

common/linkai_client.py

Visa fil

| @@ -4,6 +4,7 @@ from common.log import logger | |||

| from linkai import LinkAIClient, PushMsg | |||

| from config import conf, pconf, plugin_config, available_setting | |||

| from plugins import PluginManager | |||

| import time | |||

| chat_client: LinkAIClient | |||

| @@ -44,7 +45,7 @@ class ChatClient(LinkAIClient): | |||

| elif reply_voice_mode == "always_reply_voice": | |||

| local_config["always_reply_voice"] = True | |||

| if config.get("admin_password") and plugin_config["Godcmd"]: | |||

| if config.get("admin_password") and plugin_config.get("Godcmd"): | |||

| plugin_config["Godcmd"]["password"] = config.get("admin_password") | |||

| PluginManager().instances["GODCMD"].reload() | |||

| @@ -55,13 +56,23 @@ class ChatClient(LinkAIClient): | |||

| pconf("linkai")["group_app_map"] = local_group_map | |||

| PluginManager().instances["LINKAI"].reload() | |||

| if config.get("text_to_image") and config.get("text_to_image") == "midjourney" and pconf("linkai"): | |||

| if pconf("linkai")["midjourney"]: | |||

| pconf("linkai")["midjourney"]["enabled"] = True | |||

| pconf("linkai")["midjourney"]["use_image_create_prefix"] = True | |||

| elif config.get("text_to_image") and config.get("text_to_image") in ["dall-e-2", "dall-e-3"]: | |||

| if pconf("linkai")["midjourney"]: | |||

| pconf("linkai")["midjourney"]["use_image_create_prefix"] = False | |||

| def start(channel): | |||

| global chat_client | |||

| chat_client = ChatClient(api_key=conf().get("linkai_api_key"), | |||

| host="link-ai.chat", channel=channel) | |||

| chat_client = ChatClient(api_key=conf().get("linkai_api_key"), host="", channel=channel) | |||

| chat_client.config = _build_config() | |||

| chat_client.start() | |||

| time.sleep(1.5) | |||

| if chat_client.client_id: | |||

| logger.info("[LinkAI] 可前往控制台进行线上登录和配置:https://link-ai.tech/console/clients") | |||

| def _build_config(): | |||

+ 1

- 1

config-template.json

Visa fil

| @@ -28,7 +28,7 @@ | |||

| "voice_reply_voice": false, | |||

| "conversation_max_tokens": 2500, | |||

| "expires_in_seconds": 3600, | |||

| "character_desc": "你是ChatGPT, 一个由OpenAI训练的大型语言模型, 你旨在回答并解决人们的任何问题,并且可以使用多种语言与人交流。", | |||

| "character_desc": "你是基于大语言模型的AI智能助手,旨在回答并解决人们的任何问题,并且可以使用多种语言与人交流。", | |||

| "temperature": 0.7, | |||

| "subscribe_msg": "感谢您的关注!\n这里是AI智能助手,可以自由对话。\n支持语音对话。\n支持图片输入。\n支持图片输出,画字开头的消息将按要求创作图片。\n支持tool、角色扮演和文字冒险等丰富的插件。\n输入{trigger_prefix}#help 查看详细指令。", | |||

| "use_linkai": false, | |||

+ 5

- 1

config.py

Visa fil

| @@ -82,6 +82,8 @@ available_setting = { | |||

| "qwen_agent_key": "", | |||

| "qwen_app_id": "", | |||

| "qwen_node_id": "", # 流程编排模型用到的id,如果没有用到qwen_node_id,请务必保持为空字符串 | |||

| # 阿里灵积模型api key | |||

| "dashscope_api_key": "", | |||

| # Google Gemini Api Key | |||

| "gemini_api_key": "", | |||

| # wework的通用配置 | |||

| @@ -162,11 +164,13 @@ available_setting = { | |||

| # 智谱AI 平台配置 | |||

| "zhipu_ai_api_key": "", | |||

| "zhipu_ai_api_base": "https://open.bigmodel.cn/api/paas/v4", | |||

| "moonshot_api_key": "", | |||

| "moonshot_base_url":"https://api.moonshot.cn/v1/chat/completions", | |||

| # LinkAI平台配置 | |||

| "use_linkai": False, | |||

| "linkai_api_key": "", | |||

| "linkai_app_code": "", | |||

| "linkai_api_base": "https://api.link-ai.chat", # linkAI服务地址,若国内无法访问或延迟较高可改为 https://api.link-ai.tech | |||

| "linkai_api_base": "https://api.link-ai.tech", # linkAI服务地址 | |||

| } | |||

Binär

docs/images/aigcopen.png

Visa fil

Binär

docs/images/group-chat-sample.jpg

Visa fil

Binär

docs/images/image-create-sample.jpg

Visa fil

Binär

docs/images/planet.jpg

Visa fil

Binär

docs/images/single-chat-sample.jpg

Visa fil

+ 1

- 1

docs/version/old-version.md

Visa fil

| @@ -8,6 +8,6 @@ | |||

| 2023.03.25: 支持插件化开发,目前已实现 多角色切换、文字冒险游戏、管理员指令、Stable Diffusion等插件,使用参考 #578。(contributed by @lanvent in #565) | |||

| 2023.03.09: 基于 whisper API(后续已接入更多的语音API服务) 实现对微信语音消息的解析和回复,添加配置项 "speech_recognition":true 即可启用,使用参考 #415。(contributed by wanggang1987 in #385) | |||

| 2023.03.09: 基于 whisper API(后续已接入更多的语音API服务) 实现对语音消息的解析和回复,添加配置项 "speech_recognition":true 即可启用,使用参考 #415。(contributed by wanggang1987 in #385) | |||

| 2023.02.09: 扫码登录存在账号限制风险,请谨慎使用,参考#58 | |||

+ 4

- 2

plugins/linkai/linkai.py

Visa fil

| @@ -9,6 +9,7 @@ from common.expired_dict import ExpiredDict | |||

| from common import const | |||

| import os | |||

| from .utils import Util | |||

| from config import plugin_config | |||

| @plugins.register( | |||

| @@ -69,7 +70,7 @@ class LinkAI(Plugin): | |||

| return | |||

| if (context.type == ContextType.SHARING and self._is_summary_open(context)) or \ | |||

| (context.type == ContextType.TEXT and LinkSummary().check_url(context.content)): | |||

| (context.type == ContextType.TEXT and self._is_summary_open(context) and LinkSummary().check_url(context.content)): | |||

| if not LinkSummary().check_url(context.content): | |||

| return | |||

| _send_info(e_context, "正在为你加速生成摘要,请稍后") | |||

| @@ -196,7 +197,7 @@ class LinkAI(Plugin): | |||

| if context.kwargs.get("isgroup") and not self.sum_config.get("group_enabled"): | |||

| return False | |||

| support_type = self.sum_config.get("type") or ["FILE", "SHARING"] | |||

| if context.type.name not in support_type: | |||

| if context.type.name not in support_type and context.type.name != "TEXT": | |||

| return False | |||

| return True | |||

| @@ -253,6 +254,7 @@ class LinkAI(Plugin): | |||

| plugin_conf = json.load(f) | |||

| plugin_conf["midjourney"]["enabled"] = False | |||

| plugin_conf["summary"]["enabled"] = False | |||

| plugin_config["linkai"] = plugin_conf | |||

| return plugin_conf | |||

| except Exception as e: | |||

| logger.exception(e) | |||

+ 1

- 1

plugins/linkai/midjourney.py

Visa fil

| @@ -68,7 +68,7 @@ class MJTask: | |||

| # midjourney bot | |||

| class MJBot: | |||

| def __init__(self, config): | |||

| self.base_url = conf().get("linkai_api_base", "https://api.link-ai.chat") + "/v1/img/midjourney" | |||

| self.base_url = conf().get("linkai_api_base", "https://api.link-ai.tech") + "/v1/img/midjourney" | |||

| self.headers = {"Authorization": "Bearer " + conf().get("linkai_api_key")} | |||

| self.config = config | |||

| self.tasks = {} | |||

+ 3

- 1

plugins/linkai/summary.py

Visa fil

| @@ -2,6 +2,7 @@ import requests | |||

| from config import conf | |||

| from common.log import logger | |||

| import os | |||

| import html | |||

| class LinkSummary: | |||

| @@ -18,6 +19,7 @@ class LinkSummary: | |||

| return self._parse_summary_res(res) | |||

| def summary_url(self, url: str): | |||

| url = html.unescape(url) | |||

| body = { | |||

| "url": url | |||

| } | |||

| @@ -59,7 +61,7 @@ class LinkSummary: | |||

| return None | |||

| def base_url(self): | |||

| return conf().get("linkai_api_base", "https://api.link-ai.chat") | |||

| return conf().get("linkai_api_base", "https://api.link-ai.tech") | |||

| def headers(self): | |||

| return {"Authorization": "Bearer " + conf().get("linkai_api_key")} | |||

+ 4

- 0

requirements-optional.txt

Visa fil

| @@ -10,6 +10,7 @@ azure-cognitiveservices-speech # azure voice | |||

| edge-tts # edge-tts | |||

| numpy<=1.24.2 | |||

| langid # language detect | |||

| elevenlabs==1.0.3 # elevenlabs TTS | |||

| #install plugin | |||

| dulwich | |||

| @@ -40,3 +41,6 @@ dingtalk_stream | |||

| # zhipuai | |||

| zhipuai>=2.0.1 | |||

| # tongyi qwen new sdk | |||

| dashscope | |||

+ 1

- 1

requirements.txt

Visa fil

| @@ -7,4 +7,4 @@ chardet>=5.1.0 | |||

| Pillow | |||

| pre-commit | |||

| web.py | |||

| linkai>=0.0.3.7 | |||

| linkai>=0.0.6.0 | |||

+ 1

- 1

voice/audio_convert.py

Visa fil

| @@ -6,7 +6,7 @@ from common.log import logger | |||

| try: | |||

| import pysilk | |||

| except ImportError: | |||

| logger.warn("import pysilk failed, wechaty voice message will not be supported.") | |||

| logger.debug("import pysilk failed, wechaty voice message will not be supported.") | |||

| from pydub import AudioSegment | |||

+ 6

- 7

voice/elevent/elevent_voice.py

Visa fil

| @@ -1,7 +1,7 @@ | |||

| import time | |||

| from elevenlabs import set_api_key,generate | |||

| from elevenlabs.client import ElevenLabs | |||

| from elevenlabs import save | |||

| from bridge.reply import Reply, ReplyType | |||

| from common.log import logger | |||

| from common.tmp_dir import TmpDir | |||

| @@ -9,7 +9,7 @@ from voice.voice import Voice | |||

| from config import conf | |||

| XI_API_KEY = conf().get("xi_api_key") | |||

| set_api_key(XI_API_KEY) | |||

| client = ElevenLabs(api_key=XI_API_KEY) | |||

| name = conf().get("xi_voice_id") | |||

| class ElevenLabsVoice(Voice): | |||

| @@ -21,13 +21,12 @@ class ElevenLabsVoice(Voice): | |||

| pass | |||

| def textToVoice(self, text): | |||

| audio = generate( | |||

| audio = client.generate( | |||

| text=text, | |||

| voice=name, | |||

| model='eleven_multilingual_v1' | |||

| model='eleven_multilingual_v2' | |||

| ) | |||

| fileName = TmpDir().path() + "reply-" + str(int(time.time())) + "-" + str(hash(text) & 0x7FFFFFFF) + ".mp3" | |||

| with open(fileName, "wb") as f: | |||

| f.write(audio) | |||

| save(audio, fileName) | |||

| logger.info("[ElevenLabs] textToVoice text={} voice file name={}".format(text, fileName)) | |||

| return Reply(ReplyType.VOICE, fileName) | |||

+ 2

- 2

voice/linkai/linkai_voice.py

Visa fil

| @@ -19,7 +19,7 @@ class LinkAIVoice(Voice): | |||

| def voiceToText(self, voice_file): | |||

| logger.debug("[LinkVoice] voice file name={}".format(voice_file)) | |||

| try: | |||

| url = conf().get("linkai_api_base", "https://api.link-ai.chat") + "/v1/audio/transcriptions" | |||

| url = conf().get("linkai_api_base", "https://api.link-ai.tech") + "/v1/audio/transcriptions" | |||

| headers = {"Authorization": "Bearer " + conf().get("linkai_api_key")} | |||

| model = None | |||

| if not conf().get("text_to_voice") or conf().get("voice_to_text") == "openai": | |||

| @@ -54,7 +54,7 @@ class LinkAIVoice(Voice): | |||

| def textToVoice(self, text): | |||

| try: | |||

| url = conf().get("linkai_api_base", "https://api.link-ai.chat") + "/v1/audio/speech" | |||

| url = conf().get("linkai_api_base", "https://api.link-ai.tech") + "/v1/audio/speech" | |||

| headers = {"Authorization": "Bearer " + conf().get("linkai_api_key")} | |||

| model = const.TTS_1 | |||

| if not conf().get("text_to_voice") or conf().get("text_to_voice") in ["openai", const.TTS_1, const.TTS_1_HD]: | |||

+ 15

- 2

voice/openai/openai_voice.py

Visa fil

| @@ -21,8 +21,21 @@ class OpenaiVoice(Voice): | |||

| logger.debug("[Openai] voice file name={}".format(voice_file)) | |||

| try: | |||

| file = open(voice_file, "rb") | |||

| result = openai.Audio.transcribe("whisper-1", file) | |||

| text = result["text"] | |||

| api_base = conf().get("open_ai_api_base") or "https://api.openai.com/v1" | |||

| url = f'{api_base}/audio/transcriptions' | |||

| headers = { | |||

| 'Authorization': 'Bearer ' + conf().get("open_ai_api_key"), | |||

| # 'Content-Type': 'multipart/form-data' # 加了会报错,不知道什么原因 | |||

| } | |||

| files = { | |||

| "file": file, | |||

| } | |||

| data = { | |||

| "model": "whisper-1", | |||

| } | |||

| response = requests.post(url, headers=headers, files=files, data=data) | |||

| response_data = response.json() | |||

| text = response_data['text'] | |||

| reply = Reply(ReplyType.TEXT, text) | |||

| logger.info("[Openai] voiceToText text={} voice file name={}".format(text, voice_file)) | |||

| except Exception as e: | |||

Laddar…